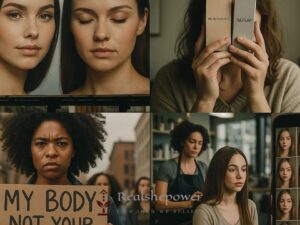

In 2025, artificial intelligence (AI) is transforming the world, from healthcare to entertainment. But not all changes are positive, especially for women. A growing problem is the misuse of AI to create harmful content, such as fake nude images of women. This issue gained attention in Japan, where authorities arrested individuals for selling AI-generated nude images of fictional women. This isn’t just a local issue—it’s a global threat to women’s safety, dignity, and mental health. In this article, we’ll dive into why AI exploitation is a major women’s issue in 2025, its impact on society, real-world examples, and practical steps to fight it.

What Is AI Exploitation of Women?

AI exploitation occurs when people use AI tools to create harmful or offensive content targeting women. One common form is “deepfakes,” which are fake images or videos made with AI that look incredibly real. In 2025, AI technology is so advanced that anyone with a computer and basic skills can create these images. This makes it easy for bad actors to produce and share harmful content online.

In Japan, police arrested a group of men for selling AI-generated nude images for profit. These images didn't depict real women, but they still caused harm by promoting a culture that objectifies women. The images were shared on websites and social platforms, making it hard to control their spread. This case shows how AI can be weaponized against women, even when the women depicted do not exist.

This problem isn’t limited to Japan. Similar cases have appeared in the United States, India, and Europe, where AI-generated content has been used to harass, shame, or exploit women. The accessibility of AI tools in 2025 makes this a widespread issue that demands urgent action.

Why Is AI Exploitation a Women’s Issue in 2025?

Women have long faced challenges like harassment, discrimination, and objectification. AI exploitation adds a new, dangerous layer to these struggles. Here are four key reasons why this is a critical women’s issue in 2025:

- Undermines Dignity: AI-generated images, even of fictional women, reduce women to objects. They create a culture where women’s bodies are seen as products for entertainment or profit, reinforcing harmful stereotypes.

- Difficult to Control: AI tools are widely available, and the content they produce is hard to trace or remove. Once an image is online, it can spread across platforms like X or Telegram in seconds, making it nearly impossible to stop.

- Mental Health Toll: Knowing that fake images exist can make women feel vulnerable, anxious, or ashamed. This fear can discourage them from participating in online spaces, limiting their freedom of expression.

- Real-World Harm: In some cases, AI images are used to target real women, such as celebrities or public figures. For example, sexually explicit deepfakes of Taylor Swift went viral on X in January 2024, and actresses like Scarlett Johansson have taken legal action over AI misuse of their likeness. In 2025, these incidents are even more common.

These factors show why AI exploitation is not just a tech problem but a human rights issue that disproportionately affects women.

Real-World Examples of AI Exploitation

To understand the scope of this issue, let’s look at a few examples from 2025 and recent years:

- Japan’s AI Image Scandal: As mentioned, men in Japan were arrested for creating and selling AI-generated nude images. These images were marketed as “customizable” products, showing how AI can turn women’s likeness into commodities.

- Deepfakes in Politics: In 2025, female politicians in India and the United States reported AI-generated videos that falsely depicted them in compromising situations. These videos were used to discredit their campaigns, showing how AI can undermine women’s leadership.

- Social Media Harassment: On platforms like X, women influencers have faced AI-generated images that mimic their appearance in harmful ways. For example, a popular fitness influencer in the UK found fake nude images of herself circulating online, leading to public shaming and mental health struggles.

- School Incidents: In 2024, high school students in the U.S. used AI apps to create nude images of female classmates, causing widespread distress. In 2025, similar cases continue, highlighting that girls and young women are also targets.

These examples show that AI exploitation affects women of all ages, professions, and backgrounds. It’s a problem that crosses borders and cultures, making it a global priority.

How AI Exploitation Impacts Society

AI exploitation doesn’t just harm individual women; it affects society as a whole. When women feel unsafe, they may avoid certain spaces, like social media, tech careers, or public roles. This means society loses their talents, perspectives, and contributions. Women are already underrepresented in some of these fields: studies in 2025 show that women make up only 28% of tech workers globally, partly because of hostile online environments.

This issue also fuels a culture of disrespect. When AI-generated images normalize the objectification of women, they can lead to real-world consequences, like increased harassment or violence. In 2025, women are already fighting for equal pay (the gender pay gap is still 14% globally) and representation (only 15% of Republican members of the U.S. Congress are women). AI exploitation adds another barrier to their progress.

Finally, this issue erodes trust in technology. If women can’t trust AI tools or online platforms, they may avoid using them. This limits innovation and economic growth, as women’s participation is essential for solving global challenges like climate change or healthcare access.

What Can We Do to Stop AI Exploitation?

The good news is that we can fight AI exploitation with collective action. Here are six practical steps to protect women in 2025:

- Stronger Laws: Governments must create clear laws to punish those who make or share harmful AI content. For example, the European Union's AI Act, which entered into force in 2024 and takes effect in stages from 2025, requires AI-generated deepfakes to be clearly labeled and could set a global standard.

- Educate the Public: Schools, workplaces, and communities should teach people about AI’s risks and benefits. Understanding how deepfakes work can help people spot and report them.

- Support Women’s Voices: Platforms like X, TikTok, and Instagram can amplify women’s stories and campaigns against AI exploitation. Hashtags like #StopAIExploitation can build awareness.

- Demand Ethical AI: Tech companies must design AI tools with safeguards to prevent misuse. They should also hire more women in AI development to ensure diverse perspectives.

- Report Harmful Content: If you see harmful AI-generated images online, report them to the platform immediately. Most major platforms in 2025 have reporting tools for deepfakes.

- Fund Advocacy Groups: Support organizations like the Cyber Civil Rights Initiative, which fights for women’s online safety. Donations or volunteering can make a big impact.

These steps require effort from individuals, companies, and governments, but they can create lasting change.

The Future of Women’s Safety in the AI Era

In 2025, AI is a double-edged sword. It can create art, improve medicine, or connect people, but it can also cause harm. If we don’t address AI exploitation now, it will grow worse, affecting more women and eroding trust in technology. Women deserve to feel safe, whether they’re posting on X, working in tech, or running for office.

Protecting women from AI exploitation is about more than stopping fake images. It’s about building a future where technology respects everyone. Women’s ideas and leadership are critical for solving global problems, from poverty to environmental crises. By ensuring their safety, we unlock their potential to shape a better world.

Call to Action: Take a Stand Today

AI exploitation is one of the biggest women’s issues in 2025, but we have the power to stop it. Start by learning more about AI and its risks—check out resources from groups like Women in AI or the Electronic Frontier Foundation. Share this article on social media platforms with hashtags like #WomensSafety or #StopAIExploitation to spread the word. Talk to your friends, family, or coworkers about why this matters.

You can also take action by reporting harmful content, supporting women’s rights organizations, or advocating for stronger laws in your country. Every small step counts. Let’s make 2025 a year where women are safe, respected, and empowered to thrive in the AI era. Join the fight today!